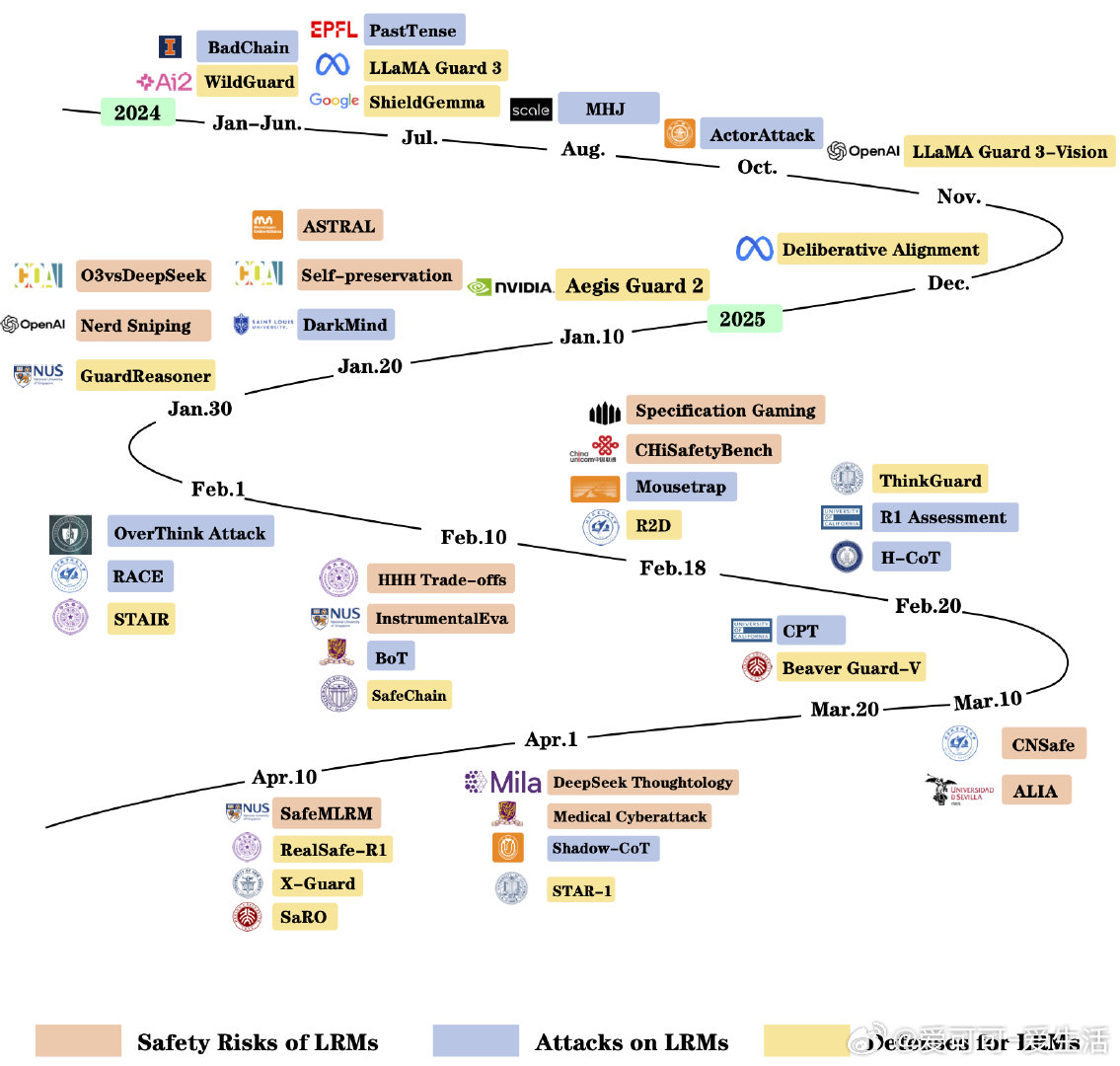

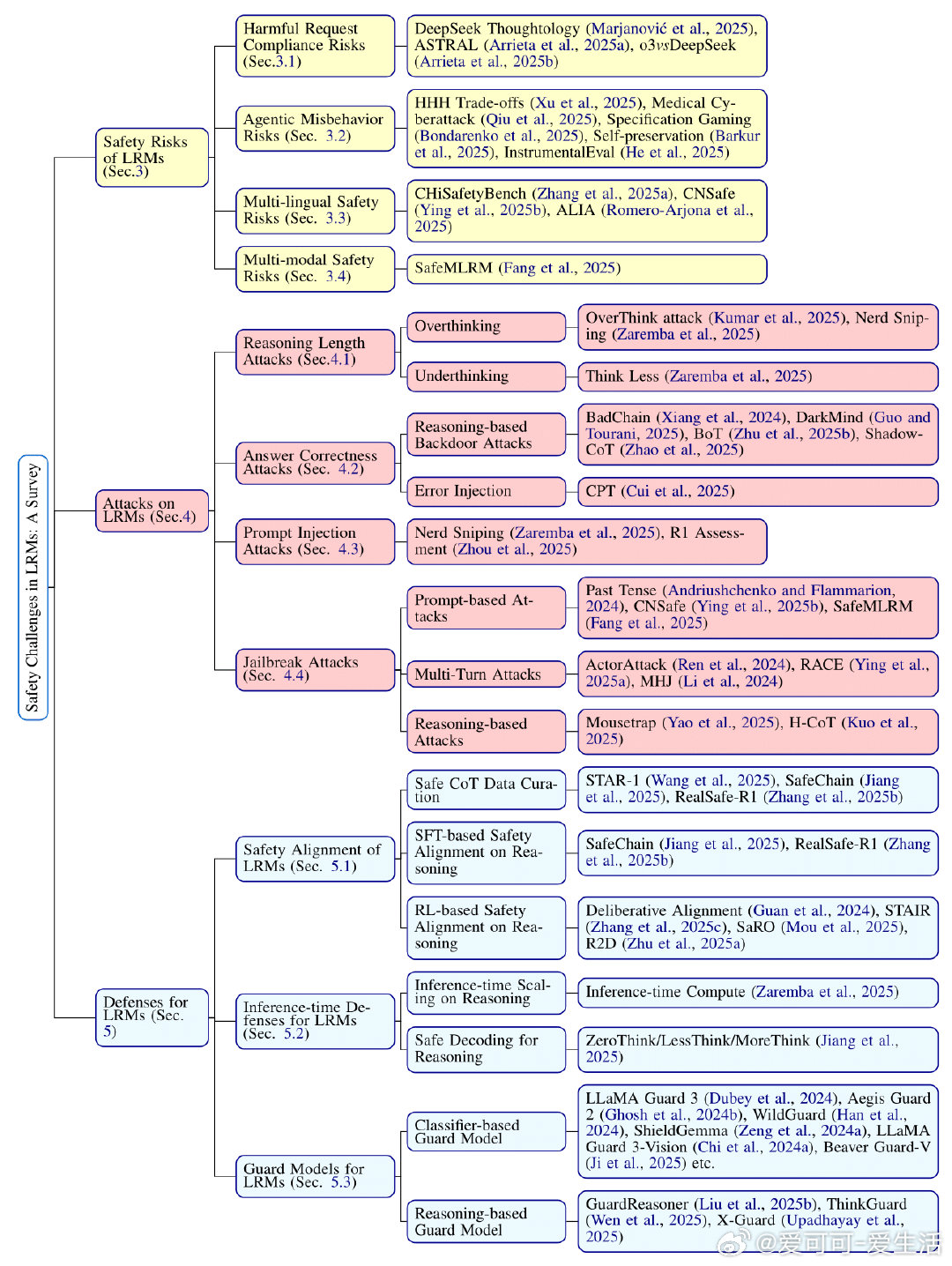

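[50 stars] Awesome-LRMs-Safety: a curated collection of papers focused on the safety of large reasoning models (LRMs). Highlights: 1. Covers safety risks along multiple dimensions, including harmful requests, multilingual settings, and multimodal settings; 2. Presents cutting-edge attack and defense strategies to help researchers address these challenges; 3. Continuously updated to keep pace with developments in the field.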

Awesome LRMs Safety

This repository contains a carefully curated collection of papers discussed in our survey: "Safety in Large Reasoning Models: A Survey". As LRMs become increasingly powerful, understanding their safety implications becomes critical for responsible AI development.

GitHub: github.com/WangCheng0116/Awesome-LRMs-Safety

Large reasoning models, AI safety, academic resources, artificial intelligence, AI interest creation program